AI promises to simplify every aspect of your smart home, but I'm skeptical that vision will be realized, at least with current technology. A smart home is a complex and unpredictable environment, and if anything, incorporating AI features increases the likelihood that things go wrong.

The promise of AI in the smart home

At its grandest, the dream of an AI-powered smart home is one where you can tell a voice assistant or chatbot how you want your home to work and then let it handle the technical bits. You might say "Have my lights in the living room come on when I arrive home in the evening" and have the AI create the automation on your behalf, making sure the various components communicate with each other in the right way. This could make smart homes more accessible for people who don't think in if-then statements.
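For readers who haven't built one, here is a rough sketch of the kind of if-then rule that request boils down to. It is plain Python, not any platform's real automation format, and the event name, the action string, and the 5 p.m. to 11 p.m. definition of "evening" are all assumptions made up for illustration.

```python
from dataclasses import dataclass
from datetime import time


@dataclass
class Event:
    kind: str  # hypothetical event name, e.g. "arrived_home"
    at: time   # local time the event happened


def evening_arrival_rule(event: Event) -> list:
    """IF someone arrives home in the evening THEN turn on the living room lights."""
    # "Evening" is assumed to mean 17:00 through 23:00; a real setup might use sunset.
    is_evening = time(17, 0) <= event.at <= time(23, 0)
    if event.kind == "arrived_home" and is_evening:
        return ["living_room_lights:on"]  # hypothetical action string
    return []  # condition not met, so nothing happens


print(evening_arrival_rule(Event("arrived_home", time(18, 30))))
print(evening_arrival_rule(Event("arrived_home", time(9, 0))))
```

The trigger, the condition, and the action each have to be spelled out by hand, which is exactly the work an AI assistant is supposed to take off your plate.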

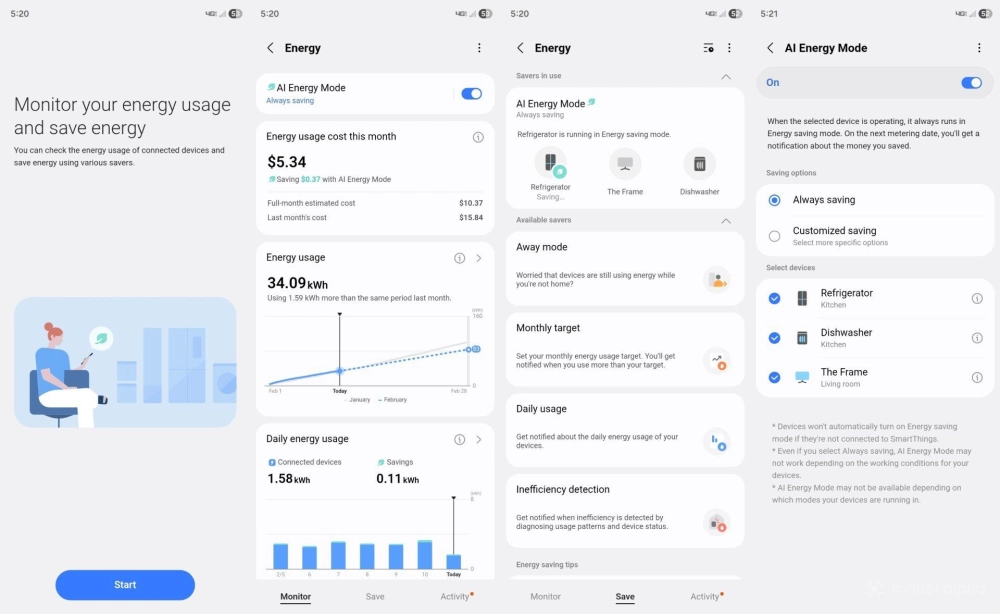

But that's a vision few smart home platforms are attempting to sell you today. Instead, when I open Samsung SmartThings, I am prompted with ways AI can enhance my experience by surfacing information that might be helpful, such as recurring notifications about how much energy my devices have used each day. This, for the record, hardly counts as AI. It's basic math. It doesn't take much in the way of artificial intelligence to look at the usage numbers for my devices that support energy tracking and add them together.

Besides, without energy tracking throughout most of my home, the information presented isn't actually useful.

SwitchBot's new AI hub can monitor camera feeds and uses AI to produce event summaries and detect various scenarios. The hub also adds support for OpenClaw, allowing you to interact with this open source autonomous AI agent without needing to install it yourself. Like Samsung's advertised AI features, OpenClaw can be used to analyze and report on the status of your smart home, but with far more depth and flexibility. It could even be used to proactively suggest and execute actions in your home on your behalf. Yet as a consumer product, it's far from ready. That leads to a bigger question—can you expect any AI based on large language models to be reliable at the job?

Where AI has already shown its limits

For the past decade, we've been able to control our smart homes using voice assistants like Alexa, Siri, and Google Assistant. These assistants detect certain phrases and require us to speak words in the correct order for them to understand, such as "turn on the [object] in the [place]."
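That kind of rigid phrase matching can be approximated with a single pattern. The sketch below is only an illustration of the idea, not how Alexa, Siri, or Google Assistant are actually implemented; the function name and the choice to accept both "on" and "off" are assumptions.

```python
import re

# Template from the text: "turn on the [object] in the [place]".
# Accepting "off" as well is an assumption added for illustration.
COMMAND = re.compile(
    r"^turn (?P<state>on|off) the (?P<object>.+?) in the (?P<place>.+)$",
    re.IGNORECASE,
)


def parse_command(utterance):
    """Return the parsed slots, or None if the words are not in the expected order."""
    match = COMMAND.match(utterance.strip())
    if match is None:
        return None
    return {slot: value.lower() for slot, value in match.groupdict().items()}


print(parse_command("Turn on the lamp in the living room"))
print(parse_command("In the living room, turn on the lamp"))  # wrong word order
```

The second call returns `None` because the words arrive in the wrong order. That rigidity is frustrating, but it also means the same sentence always produces the same result.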

Now the companies behind each of these voice assistants are in the process of replacing them with AI chatbots. Google wants you to use Gemini instead of Google Assistant. Samsung is working on a chatbot version of Bixby with the help of Perplexity. Amazon has shipped Alexa+ as a successor to Alexa.

Alexa+ has already demonstrated the weaknesses of using large language models in a smart home. Visit the Alexa Reddit page, and you won't have to search long to find people who reverted from Alexa+ to traditional Alexa. Complaints were prominent enough to make news, such as this story from TheStreet. Alexa is hardly unique in this regard. When Google rolled out Gemini for Home, the New York Times reported on its hallucinations.

Unpredictability is the nature of the beast with AI, and smart homes are a bigger challenge than answering questions, setting timers, and playing music. Now imagine adding the complexity of Matter on top, such as the issues people have encountered with Matter and IKEA’s latest smart home line.

Large language models are not ideal for controlling a smart home

Telling a traditional voice assistant to turn on the bedroom light yields a consistent and predictable result. The same isn't true of a large language model, whose added "thinking" and "analysis" are actually a hindrance in this circumstance. A traditional voice assistant consistently interprets "bedroom light" as the name of the relevant light or the light in a room named "bedroom." A large language model instead asks itself what you meant by those same words, and it can come to a different conclusion each time about which bedroom is the right one and which light in that bedroom you might mean. If you have multiple bedrooms, each with multiple lights, there is ample room for confusion. All of this thinking also takes time, increasing the latency between when you give a command and when you see the results.
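The traditional behavior described here amounts to a fixed lookup: try the phrase as a device name first, then as a room plus a device type. The sketch below is a simplified illustration under that assumption, with a made-up device list; it is not the actual logic of any shipping voice assistant.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    name: str  # what the user named the device
    room: str  # room the device is assigned to
    kind: str  # device type, e.g. "light" or "fan"


# Hypothetical home used only for this example.
HOME = [
    Device("ceiling light", "bedroom", "light"),
    Device("desk lamp", "bedroom", "light"),
    Device("porch light", "outside", "light"),
    Device("ceiling fan", "bedroom", "fan"),
]


def resolve(phrase, devices):
    """Map a spoken phrase to devices using two fixed rules, with no guessing."""
    phrase = phrase.lower().strip()
    # Rule 1: the phrase is exactly the name of a device.
    exact = [d for d in devices if d.name == phrase]
    if exact:
        return exact
    # Rule 2: the phrase is "<room> <kind>", e.g. "bedroom light".
    room, _, kind = phrase.rpartition(" ")
    return [d for d in devices if d.room == room and d.kind == kind]


print([d.name for d in resolve("bedroom light", HOME)])
print([d.name for d in resolve("porch light", HOME)])
```

With this device list, "bedroom light" matches both bedroom lights. The lookup does not pick the one you had in mind, but it returns the same answer on every call, which is the predictability a language model does not guarantee.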

To make matters worse, a smart home isn't a static dataset that's similar from one user to the next or used in consistent ways. A Tapo S505 smart switch might control a ceiling light, a ceiling fan, or an outdoor porch light. Then there are ambiguous words, like "light," which may refer to the light on a ceiling fan or the smart bulb in a desk lamp. If you say that it feels dusty in here, does that trigger the air purifier or the robot vacuum?

This isn't a problem that seems solvable via large language models. They are inherently unpredictable, and so are smart homes. This isn't a matter of iterating on the tech, because there are so many steps along the way where the AI can quietly fail. Relying on this type of AI inherently injects guesswork into an experience most of us would prefer to be reliable. When we want to turn on the lights, we expect the lights to actually turn on, and specifically the ones we have in mind.

If you see AI advertised as a perk of a smart home platform or hub, it isn't necessarily an upgrade. The current approach to building a smart home, which involves installing apps, manually adding devices, and creating automations, may get tedious, but it works. AI is better suited as an enhancement than a replacement, and even then, its utility is unproven.